Visual effects supervisor Kim Libreri explores the emerging trends in the industry and looks ahead with 12 predictions.

Kim Libreri looks at the current trends and projects the future paths of the vfx industry.

1. We will build a better virtual human

Recently we have begun to see a stream of movies that are using virtual humans to represent principal characters. Each movie has chosen a unique solution to the problems that animating convincing human performances presents. With The Incredibles, Pixar provided a heavily animated and stylized approach to superheroes. In complete contrast, Sony Pictures Imageworks deployed a very technological motion capture-based approach to creating the near photorealistic humans for The Polar Express.

Now that we have sub-surface scattering, measured skin and material shading models, real world sampled lights and sophisticated hair styling and rendering systems, I am absolutely convinced that we can render totally photorealistic still renditions of digital doubles that are indistinguishable from a real actor. It's when things start to move that the problems appear. Human beings don't move the same way that machines do.

The complex nature of the human skeleton and muscle structures means that our movements are not smooth, linear or easily defined. This has made creating realistic animated human beings very difficult without using motion capture, and even that has a long way to go before we see perfect animated skeletal reconstructions. In addition, it is not practical to have a movie that is purely based on motion capture; there are always cases where a real human cannot achieve a desired performance and the skills of an animator are required.

What is needed is a system that allows an animator to drive a human skeleton in a very gesture/pose-based way and let the computer fill in all the nuances of joint offsets and non-linearity. Animators are the souls of the digital actors and should not be burdened with the concepts of biomechanics.

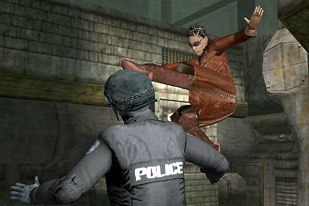

We created simulated human forms for the Burly Brawl scenes in the second and third Matrix movies, but the process we went through was extremely complex and time-consuming. This will become much easier as animation systems evolve that are able to recreate the subtleties of human movement. By applying the data (and lessons learned) from motion capture sessions onto the tools that animators currently work with, we will have the technology to create realistic virtual human beings from scratch.

2. We will be able to create believable facial animation

We will also learn to do realistic human facial animations, but this will take a lot of work. The human face is immensely complicated and has many moving features, which are not easy for a computer to define. At the moment we are able to do motion capture of an actor's facial expressions, but we still can't even transfer that data to another character's face in a simple and realistic manner. Some good first steps have been made in the area of realistic facial animation: for example, I thought that ILM did a good job on The Hulk, Imageworks deployed an interesting MoCap solution for The Polar Express and, of course, we developed our own universal capture system in the Matrix sequels for Hugo Weaving, when creating the growing army of Agent Smiths. But I expect to see dramatic progress in this area in the coming years.

Sky Captain (left) and I, Robot display the advances in the land of digital environments. Sky Captain image TM & © Paramount Pictures. I, Robot image and © 2004 Twentieth Century Fox. All rights reserved.

3. We will be able to create synthetic massive environments

With the Star Wars prequels and more recently in Sky Captain and the World of Tomorrow and I, Robot, we have seen scenes that are almost completely shot on a bluescreen stage. This kind of work requires fully synthetic environments, environments that are very costly if they have to be totally photorealistic.

Creating a digital city, for instance, is an extremely labor-intensive task right now. For the Matrix movies we had to do a very careful previs of every detail of every shot in order to understand what we needed to fabricate. That was a lot of work and few productions can realistically invest that kind of time and effort. We will soon see software that will create massive environments in a much more automated fashion. An application like this will generate every building, tree, road or other element and will accurately reproduce the interaction of various lighting conditions: how the light hits each building, how it reflects off different surfaces, how it reflects back onto nearby objects.

All of this will require more computational power than any one machine can provide, but will create tremendous flexibility for vfx work. I can see technology like this being a lifesaver for those last minute shot requests that we often seem to get right at the end of post-production.

4. Pipeline tools will become standardized

This may sound like an obvious prediction, but it will have a significant effect on vfx work. At the moment the larger facilities invest hundreds of person-years in developing their own in-house pipeline tools. This is uneconomical and totally unrealistic for smaller shops. I expect to see off-the-shelf applications for things like tape backup and data migration, or things like managing large scenes with packages like Maya, mental ray or RenderMan. As I mentioned above, I also expect to see standardized tools for creating virtual humans, as well as things like full synthetic city and large-scale environment creation software.

This is a business like any other: we will get these tools when the large software vendors see a profit in it. That, I predict, will come from the crossover with the games industry. Once there are two markets for the same tools, the prospects for profitability improve significantly.

Another example: there should be a standard for making and managing review sessions. Some companies, like Iridas (with whom I've worked and who make the best daily system on the market), are starting to bring out tools for managing the process (slates, automated file conversions, editorial clips etc.), but this is just the start. We built our own front end for this with our FrameCycler DDS system, but, as I said, smaller facilities rarely have the resources to build things like this for themselves. Soon they won't have to.

Advances in digital vfx animation, as in films like The Day After Tomorrow, will mean far less physical effects work.

5. Advances in digital VFX animation will mean far less physical effects work

We saw the quality of the dramatic digital effects in The Day After Tomorrow and we're going to see a lot more of that. As our understanding of the physics of explosions, waves and things like car crashes grows, we will rely less on crashing real cars or blowing up real buildings. Initially this will create imagery that has never been seen before, but ultimately, once the technology becomes commonplace, the result will be production cost savings, and smaller companies and independent filmmakers will have access to much more complex vfx than they have had to date.

And there is another important advantage in this: digital effects are much more directable than physical ones. In Matrix Reloaded, for example, we created a completely digital flaming tsunami to chase Neo. It's hard to tell real flames to behave. That problem is largely eliminated when we go digital.

6. Off-site rendering will be the norm

At the peak of the Matrix work we were running 2,500 CPUs at ESC. That is a massive cost overhead and it should be unnecessary for new facilities. I expect to see a strong trend toward off-site rendering so that vfx and post houses will concentrate much more on the artistry of their work and will outsource the heavy computational work. Now that we are seeing massive multi-player online games, the same thing will happen in the gaming industry. It's a simple matter of economics, but it will change the way we approach our work.

7. GPU power will change the post-production pipeline

This is an easy prediction because it has already started to happen: we will see increasing use of GPUs with shader technology to accelerate computation. Basically, the GPU is becoming a much more important part of the hardware. SpeedGrade is an excellent example of what this means not just for vfx or post-production, but for the whole filmmaking process. By taking advantage of shader technology, SpeedGrade allowed us to do preliminary color correction work in realtime, for example, with Catwoman. Beyond this, we'll see sophisticated uses of the GPU technology with the hardware acceleration of 3D rendering products such as RenderMan, mental ray etc.

Games like Enter the Matrix offer increasing cooperation between feature films and the games they inspire. Courtesy of Atari.

8. Movie/game conversion will become common

Over the next five years sharing assets with game developers will happen more and more. This exchange will happen both ways: for example, as vfx technologies advance and computational power increases, game developers will use more film-style vfx. This will allow for more data exchange with movies. Similarly, we used a game technology called Havok as a rigid body dynamics solver on the Matrix sequels. That allowed us to use a digital stunt double for particularly nasty knocks. We also used it to explode glass windows and for blowing up buildings in the rain fight at the end of Matrix Revolutions.

Crossover tools will become more common in the future for purely practical reasons, but that will have obvious implications for movie/game tie-ins. One day we may even see truly integrated parallel development of games and movies.

9. Digital film capture and workflow will become the norm

Not all cinematographers will be ready for this, but the convenience and flexibility of digital media mean it is coming. We are close to the point where the dynamic range of celluloid can be matched by digital technologies, maybe in less than five years. In fact, I believe that the current hesitation in embracing a completely digital workflow has more to do with economics than anything else. The cost of setting up an end-to-end digital pipeline is still too high for most facilities. But that will change dramatically.

10. Digital distribution will change the way movies are released

Once we have a real network-based digital distribution system in place, I expect we will see patching on the fly, much the way software is currently updated on a more-or-less ongoing basis. Unless union rules or regulations expressly prohibit it, we will see ongoing enhancement to movies after their release and for the duration of their theater-run.

The field of visual effects will extend from post-production into post-release work! There are many who doubt this, but I expect that economics will prevail and we will see refinements from one week to the next. Most of this will probably be quite subtle, but if a movie isn't doing well initially, we may even see advertising for new elements, scenes, effects etc! I don't like this idea, but having recently added a new, almost full CGI scene to a movie five weeks before its release date, I expect it will happen nevertheless. Once we have the capability to patch movies on the fly, I just don't see us not using it.

Impressive vfx work is being done in Europe as displayed in films like Immortel. © 2004 Telema TF1 Films Production Ciby 200 RF2K Force Majeure Prods. Medusa Film.

11. Globalization of VFX will have an unexpected price

I have mixed feelings about this, but given the cost of feature film work, it is inevitable that studios will be sending more of their work overseas to Asia and other regions where labor costs are lower. Of course, I am pleased to see a global sharing of the wealth, but I am concerned about the impact this may have on the local industry. We may see a brain drain of our own talent and capabilities, as much to other industries as to overseas facilities.

We can't forget that for all the physics and technology that vfx work builds on, at the end of the day this is artistic work. It can be very difficult to communicate clearly across continents, time zones and languages. And it's not just one-way communication, say from the director to the artists: vfx work is an ongoing conversation back and forth between everyone involved. The best work is done when people are in the same physical environment.

12. VFX work will change fundamentally from emulation to simulation

The biggest revolution in vfx work will be a change in how we go about creating the effect in the first place. Rather than recreating the appearance of things digitally, we will more and more be able to recreate the physics of movement, light etc. That data will be more powerful and inherently more flexible. Since it is three-dimensional information, directors and artists will have the freedom to adjust the angle of a shot, move in and out etc.

I am conscious in all my work of an odd parallel between the rapidly developing capabilities of my industry and the concept of The Matrix: a virtual world, a complex simulation of the real world. The story of the Matrix is, of course, a parable about larger existential questions, yet in the technology of my industry there is an element of overlap.

Given enough time and money, there is nothing that cannot be represented on film now. We have barely scratched the surface of what we can do with our technologies today. It's not just about spectacle; it's about being able to visualize the unseen.

This is very exciting work to be involved in, and I am confident we will see significant developments in how we work and what we are able to do, not just one day, but in the next few years.

Kim Libreri is a veteran visual effects supervisor and founder of ESC Ent., which created the vfx for The Matrix sequels and Catwoman. Libreri recently joined Industrial Light & Magic. He has worked on the technology side of the industry for more than 12 years and started his film career as a senior software engineer at the Computer Film Co. in London. He later worked at Cinesite Europe as head of technology and was one of the original founders. The Academy of Motion Picture Arts and Sciences honored Libreri and his Matrix team with a Technical Achievement Award for the development of a system for image-based rendering allowing choreographed camera movements through computer graphic reconstructed sets.